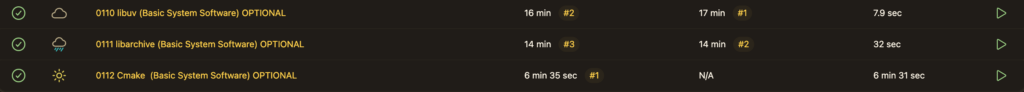

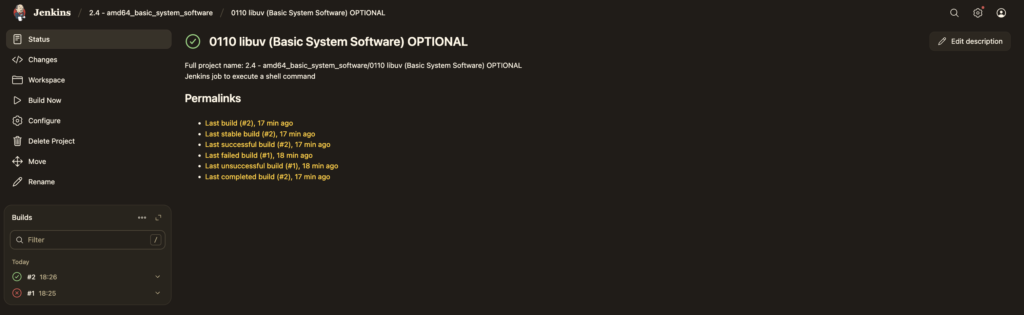

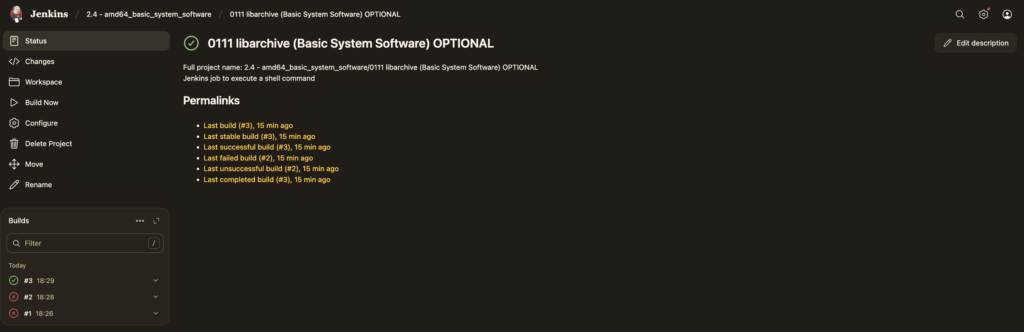

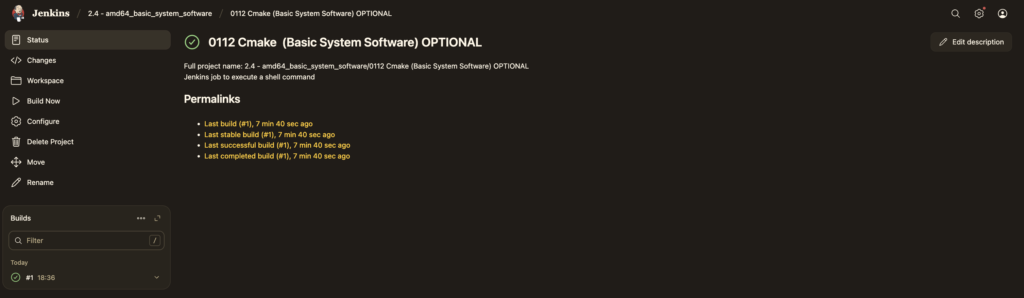

It is time to add some GenAI tools to my Linux distribution to experiment with the throughput I can achieve on a minimal system. I don’t have a GPU yet, so I will use llama.cpp, which seems more practical than vLLM for CPU-only setups. First, I need to compile a few dependencies (libuv, libarchive, and CMake). As described in the llama.cpp documentation, CMake is required to build the project.

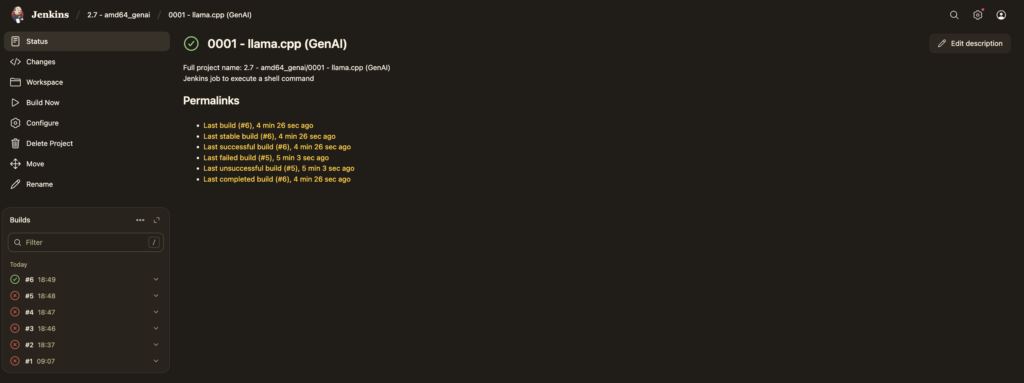

I have prepared several Jenkins jobs via Ansible…

Chroot into the system to check that the CMake binary is available:

ubuntu@ns3137793:~/gdt$ sudo bash ./jenkins-lfs/chroot_in.sh

(lfs chroot) root:/# find / -name "cmake" -type f

/sources/cmake-4.2.1/Bootstrap.cmk/cmake

/sources/cmake-4.2.1/bin/cmake

Build llama.cpp
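For reference, here is a minimal sketch of what the build step can look like inside the chroot. It follows the two-step CMake flow from the llama.cpp documentation (configure, then build). The checkout location `/sources/llama.cpp` and the `LLAMA_SRC`, `CMAKE`, and `JOBS` variables are my assumptions for illustration, not the exact values used by the Jenkins jobs.

```shell
#!/bin/sh
# Sketch: configure and build llama.cpp with CMake on a CPU-only host.
# LLAMA_SRC, CMAKE and JOBS are hypothetical names; adjust them to your layout.
LLAMA_SRC="${LLAMA_SRC:-/sources/llama.cpp}"      # assumed checkout location
CMAKE="${CMAKE:-cmake}"                           # e.g. /sources/cmake-4.2.1/bin/cmake
JOBS="${JOBS:-$(nproc 2>/dev/null || echo 1)}"    # parallel compile jobs

build_llama() {
    # Configure out-of-tree into "$LLAMA_SRC/build", then compile.
    "$CMAKE" -S "$LLAMA_SRC" -B "$LLAMA_SRC/build" -DCMAKE_BUILD_TYPE=Release &&
    "$CMAKE" --build "$LLAMA_SRC/build" --config Release -j "$JOBS"
}

# Only build when the source tree is actually present.
if [ -f "$LLAMA_SRC/CMakeLists.txt" ]; then
    build_llama
else
    echo "llama.cpp sources not found in $LLAMA_SRC" >&2
fi
```

With this layout the resulting binaries, including `llama-server`, should end up under `$LLAMA_SRC/build/bin`.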

I’m now ready to load a lightweight model and launch llama-server. To conserve resources, I’ve bypassed systemd and written a custom init script to handle the startup process.
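To give an idea of the approach, here is a rough sketch of an init-style startup script. It is not the script from the repository: the binary path, model path, host, port, and the `DRY_RUN` switch are all assumptions made for illustration. The `llama-server` options used (`-m`, `--host`, `--port`, `-t`) are the standard ones for model file, bind address, port, and thread count.

```shell
#!/bin/sh
# Sketch of a minimal init-style startup for llama-server without systemd.
# All paths and defaults below are assumptions, not the repo's real values.
LLAMA_SERVER="${LLAMA_SERVER:-/usr/local/bin/llama-server}"
MODEL="${MODEL:-/models/model.gguf}"
HOST="${HOST:-0.0.0.0}"
PORT="${PORT:-8080}"
THREADS="${THREADS:-$(nproc 2>/dev/null || echo 1)}"
DRY_RUN="${DRY_RUN:-1}"   # hypothetical switch: set to 0 in the real init

# Runs the server, or prints the command when called as "llama_server echo".
llama_server() {
    "$@" "$LLAMA_SERVER" -m "$MODEL" --host "$HOST" --port "$PORT" -t "$THREADS"
}

if [ "$DRY_RUN" = "0" ]; then
    # Mount the pseudo-filesystems a bare system needs.
    mount -t proc proc /proc
    mount -t sysfs sysfs /sys
    mount -t devtmpfs devtmpfs /dev
    # An init process must never exit, so restart the server if it stops.
    while :; do
        llama_server
        sleep 1
    done
else
    echo "dry run: $(llama_server echo)"
fi
```

Running it with the default `DRY_RUN=1` only prints the command line, which is handy for checking paths before baking the script into the ISO.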

This video shows an example of how to build an ISO, boot it, and test local AI inference.

I’m working on this repo: https://github.com/lucky-sideburn/generic-distro-toolkit. Anyone who wants to join is more than welcome!