Recently I came across an article published on thenewstack.io that declares the end of a culture and a set of methodologies and tools known as DevOps. Now, I'd obviously like to dodge the *accollo* (Italian slang for a burden someone offloads onto you) of renaming my blog (maybe with an *accollo* card from https://accolli.it/). I'll plead the case for "DevOps is not dead", but without being "legacy" or clinging to a spirit of conservatism (because the market and the communities rightly need novelty).

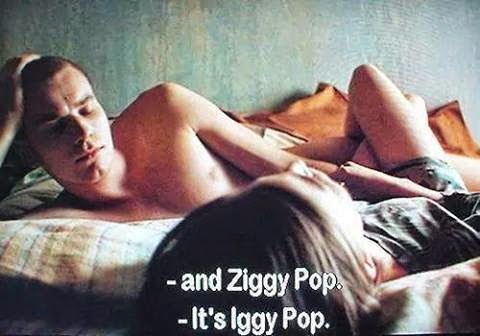

Whenever I read articles like https://thenewstack.io/devops-is-dead-embrace-platform-engineering/ I'm always reminded of the same scene from Trainspotting, this one: https://youtu.be/rKejgcqXLO0?t=4

Diane: You're not getting any younger, Mark. The world is changing. Music is changing, even drugs are changing. You can't sit in here all day thinking about heroin and Ziggy Pop.

Mark: It's Iggy Pop…

Diane: Whoever, he's dead anyway.

Mark: No, no, he's not dead. He toured last year, Tommy went to see him.

Diane: The point is, you've got to find something new.

Exactly, the scene where Diane tries to make Mark feel old 😀

Standing still and ignoring what's new is a problem, because you risk heading down paths slightly different from the directions set by the various communities. In the wonderful world of open source, drifting away from the communities inevitably increases the effort needed to develop new solutions and to tackle topics such as security and innovation.

Some time ago I built a project to find out which topics came up most often in the field I was working in. The system was very simple: a mailbox that I had subscribed to a large number of newsletters, job portals, and technical blogs of every kind. The result was interesting, and I wrote about it here. After reading the thenewstack.io article, I thought about repeating that analysis, comparing SRE, DevOps, and Platform Engineer over a fairly long period of time (maybe I'll do it…).
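Purely as an illustration (the original project's code is not shown here), that kind of analysis could be sketched in Python as below, assuming the emails have already been exported as (date, text) pairs. The regexes, the `TOPIC_PATTERNS` dict and the `monthly_topic_counts` function are hypothetical names chosen for this sketch: it counts, per month, how many messages mention each topic at least once.

```python
import re
from collections import Counter, defaultdict
from datetime import date

# Hypothetical topic patterns (an assumption, not the original project's rules):
# each label maps to a case-insensitive regex with word boundaries.
TOPIC_PATTERNS = {
    "SRE": re.compile(r"\b(SRE|site reliability engineer(ing)?)\b", re.IGNORECASE),
    "DevOps": re.compile(r"\bdevops\b", re.IGNORECASE),
    "Platform Engineering": re.compile(r"\bplatform engineer(ing|s)?\b", re.IGNORECASE),
}


def monthly_topic_counts(messages):
    """Count, for each month, how many messages mention each topic at least once.

    `messages` is assumed to be an iterable of (datetime.date, str) pairs,
    e.g. the date and body of each email received by the mailbox.
    """
    per_month = defaultdict(Counter)
    for day, text in messages:
        month = day.strftime("%Y-%m")  # bucket key such as "2022-09"
        for topic, pattern in TOPIC_PATTERNS.items():
            if pattern.search(text):  # one hit per message, not per occurrence
                per_month[month][topic] += 1
    return dict(per_month)


if __name__ == "__main__":
    # Made-up sample messages, only to show the expected input shape.
    sample = [
        (date(2022, 9, 1), "Hiring: Senior DevOps engineer"),
        (date(2022, 9, 15), "Platform Engineering meetup: building an internal developer platform"),
        (date(2022, 10, 3), "SRE and DevOps: what's the difference?"),
    ]
    for month, counts in sorted(monthly_topic_counts(sample).items()):
        print(month, dict(counts))
```

Counting each message once per topic, rather than every occurrence, keeps a single long newsletter from skewing the monthly totals; plotting these counts over a long enough period would show how the three terms trend relative to each other.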

Below are the points I picked out and analyzed from the article "DevOps Is Dead. Embrace Platform Engineering".

Point 1

Developers don’t want to do operations anymore, and that’s a bad sign for DevOps, at least according to this article by Scott Carey and this Twitter thread by Sid Palas.

I picture legions of DevOps engineers forcing developers to recompile the kernel during their lunch break. Clearly, the goal is to relieve people of the background noise that keeps them from moving faster toward their goals. That has always been the purpose of whoever manages systems, automation, and CI/CD pipelines.

Point 2

To be fair, it depends on who you ask. This Reddit thread highlights developers’ strong and often conflicting opinions about the “you build it, you run it” paradigm. Some developers think it’s beneficial, even sometimes necessary, that they take over operations tasks. Others don’t want to touch operations at all. Still, others are indifferent.

This is simply a matter of attitude and team organization. Are you a dev who enjoys using Kubernetes or opening "vi" to configure things? Great: if you're willing to do it, it all adds to your professional background.

Point 3

Both are necessary: Self-service capabilities are essential to moving quickly and efficiently. But with the complexity of the modern cloud native world, freedom without appropriate boundaries creates too much pressure and becomes counterproductive.

An interesting point. Input from someone who designs and manages cloud architectures can certainly help avoid going off the rails on security, budget, tuning, and so on.

Point 4

What is Platform Engineering?

“The discipline of designing and building toolchains and workflows that enable self-service capabilities for software engineering organizations in the cloud native era. Platform engineers provide an integrated product most often referred to as an “internal developer platform” covering the operational necessities of the entire life cycle of an application.”

This is a very interesting aspect. Building automated provisioning tools for developers is something we have been working on for a long time, and it has led to some really interesting projects I had the chance to work on.

Indeed, giving a name to this specific branch of specialization makes sense. Perhaps the problem is that DevOps engineers are seen as jacks-of-all-trades, and in fact not every DevOps engineer can, or wants to, build services of this kind.

Conclusions

I think it makes sense to identify a new area covering a subset of the work that is nowadays assigned to DevOps: this emerging community (Platform Engineering) is definitely a trend worth watching. You can find some interesting videos here: https://www.youtube.com/c/PlatformEngineering/videos

However, the title of the thenewstack.io article is clearly provocative because, as far as I understand, Platform Engineers do not fully overlap with DevOps. So I'd say the *accollo* has been dodged: I can keep the same name for the blog!